Basic GPU Optimisation

A look at optimising Matrix Multiplication on a GPU.

There are many different types of GPUs out there and even more frameworks built to run code on them.

Today we will explore using GPUs for matrix multiplication, starting with the basics and moving toward a more optimised kernel.

For this experiment, I'm using CUDA on a Jetson Nano 4GB dev board.

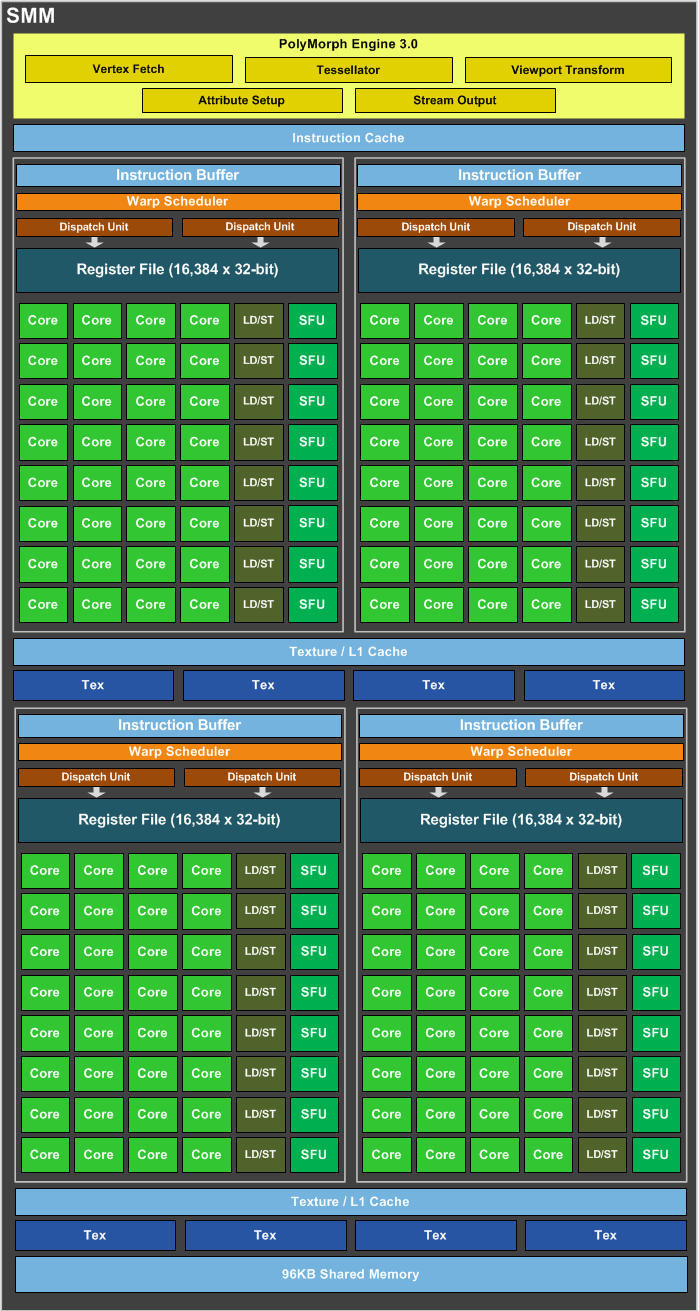

The Jetson Nano is essentially a cut down version of the GPU used in the Nintendo Switch, featuring just 128 CUDA cores and built using the Maxwell architecture.

Naive Kernel

We'll start with a simple naive matrix multiplication kernel. This serves as our baseline for performance testing.

Our performance metric will be GFLOPs.

According to the Maxwell wiki page we can estimate the theoretical peak performance as:

Formula:

2 x (number of CUDA cores) x (core clock speed in Hz)

With default clocks (921.6 MHz):

2 x 128 x 0.9216 = 236 GFLOPs FP32

With MAX-N clocks (1.479 GHz):

2 x 128 x 1.479 = 379 GFLOPs FP32

This calculation simply ignores the affect of Memory Bandwidth and therefore will be completely Theoretical.

For peak realworld we are going to use the preoptimised kernels provided by cuBLAS. With these kernels we can reach:

Execution time: 0.980652 seconds

Average GFLOPS: 175.188

Now with expectations in place we can begin.

Below is a naive kernel, it is a function that will be run on the GPU.

It multiplies A by B and stores the output in C.

This computation is done in parallel.

__global__ void matrix_multiply(const float* A, const float* B, float* C,

uint M, uint N, uint P) {

uint i = blockIdx.y * blockDim.y + threadIdx.y;

uint j = blockIdx.x * blockDim.x + threadIdx.x;

if (i >= M || j >= P) return;

float sum = 0.0f;

for (uint k = 0; k < N; ++k) {

sum += A[i * N + k] * B[k * P + j];

}

C[i * P + j] = sum;

}

The output of this kernel is:

Execution time: 13.8172 seconds

Average GFLOPS: 12.4336

Profiling

10x slower than cublas.

So why is this slow?

nvprof to the rescue.

Here we can see that we are computationally bound by our kernel not the intial memory transfers:

Type Time(%) Time Calls Avg Min Max Name

GPU activities: 99.52% 15.5148s 11 1.41044s 1.39188s 1.55823s matrix_multiply(float const *, float const *, float*, unsigned int, unsigned int, unsigned int)

0.35% 53.967ms 2 26.983ms 24.185ms 29.782ms [CUDA memcpy HtoD]

0.13% 20.768ms 1 20.768ms 20.768ms 20.768ms [CUDA memcpy DtoH]

API calls: 97.09% 15.5216s 11 1.41105s 1.39320s 1.55981s cudaDeviceSynchronize

2.18% 348.64ms 1 348.64ms 348.64ms 348.64ms cudaDeviceSetLimit

0.50% 80.323ms 3 26.774ms 22.138ms 29.474ms cudaMemcpy

0.18% 29.480ms 3 9.8265ms 9.7718ms 9.8927ms cudaMalloc

0.04% 5.7551ms 11 523.19us 99.637us 1.4802ms cudaLaunchKernel

0.01% 972.73us 3 324.24us 304.18us 350.11us cudaFree

0.00% 132.61us 97 1.3670us 729ns 34.064us cuDeviceGetAttribute

0.00% 38.959us 1 38.959us 38.959us 38.959us cudaGetDeviceProperties

0.00% 10.260us 1 10.260us 10.260us 10.260us cuDeviceTotalMem

0.00% 7.6560us 3 2.5520us 1.3020us 3.2810us cuDeviceGetCount

0.00% 3.9590us 2 1.9790us 1.6150us 2.3440us cuDeviceGet

0.00% 2.1870us 1 2.1870us 2.1870us 2.1870us cuDeviceGetName

0.00% 1.0940us 1 1.0940us 1.0940us 1.0940us cuDeviceGetUuid

Optimising with Tiling

So we need to improve the kernel.

The big problem with our kernel is that it accesses global memory every time it needs a value.

One of the ways to optimize around this is by using tiling.

This is the approach of "caching" global data in shared memory to avoid the cost repeatedly accessing global memory.

__global__ void matrix_multiply(const float *A, const float *B, float *C,

uint M, uint N, uint P)

{

uint i = blockIdx.y * blockDim.y + threadIdx.y;

uint j = blockIdx.x * blockDim.x + threadIdx.x;

if (i >= M || j >= P) return;

constexpr auto TILE 16;

__shared__ float tileA[TILE][TILE];

__shared__ float tileB[TILE][TILE];

float sum = 0.0f;

for (uint t = 0; t < (N + TILE - 1) / TILE; t++)

{

if (i < M && t * TILE + threadIdx.x < N)

tileA[threadIdx.y][threadIdx.x] = A[i * N + t * TILE + threadIdx.x];

else

tileA[threadIdx.y][threadIdx.x] = 0;

if (j < P && t * TILE + threadIdx.y < N)

tileB[threadIdx.y][threadIdx.x] = B[(t * TILE + threadIdx.y) * P + j];

else

tileB[threadIdx.y][threadIdx.x] = 0;

__syncthreads();

for (uint k = 0; k < TILE; k++)

sum += tileA[threadIdx.y][k] * tileB[k][threadIdx.x];

__syncthreads();

}

}

This one change brings an almost 5x speed up and highlights the importance of memory access patterns on GPU performance.

Execution time: 2.83375 seconds

Average GFLOPS: 60.6258

For now I'll leave this here, I recommend you experiment and research further.

Even with this result we are still far away from theoretical limit or even the cuBLAS result so still there is plenty of optimisation to go.

There are a ton of great resources availible out there e.g. CUDA C++ Best Practices Guide.